Pixels, Pictures, and What Computers See

A digital image is not magic. It is a grid of numbers. Each pixel in the grid is described by a few numbers that encode its color and brightness.

Imagine graph paper filled with tiny boxes. In the common 8-bit RGB format, each box holds three numbers (red, green, and blue), each ranging from 0 to 255. Together these numbers recreate a scene.

So the pixel at row 10, column 20 might be 120, 200, 80. Your computer sees that as light green. Combine millions of such boxes and you get a clear photo.
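As a minimal sketch of that grid, the snippet below builds a tiny image from nested Python lists and sets the pixel mentioned above. The 20 by 30 size, the black fill, and the names `image`, `height`, and `width` are illustrative assumptions, not part of any particular library.

```python
# A tiny "image": a grid of (red, green, blue) tuples, each value 0-255.
# The dimensions and fill color are arbitrary choices for illustration.
height, width = 20, 30
black = (0, 0, 0)

# Build the grid row by row so that each row is an independent list.
image = [[black for _ in range(width)] for _ in range(height)]

# Set the pixel at row 10, column 20 to the light green from the text.
image[10][20] = (120, 200, 80)

red, green, blue = image[10][20]
print(red, green, blue)  # 120 200 80
```

Green is the largest of the three values, which is why the pixel reads as a shade of green.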

When you snap a selfie, your phone records every pixel in order, usually compressing the result to save space. Opening the file tells the device which color goes in each box.
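One common way to keep pixels "in order" is row-major layout: all of row 0, then all of row 1, and so on. The sketch below assumes that layout with three bytes per pixel. Real formats such as JPEG or PNG add headers and compression on top, so treat this as a model of the idea rather than a file parser. The helper names `flatten` and `pixel_at` are hypothetical.

```python
def flatten(image):
    """Store an image (list of rows of (r, g, b) tuples) as one flat bytes object."""
    return bytes(channel for row in image for pixel in row for channel in pixel)


def pixel_at(data, width, row, col):
    """Read back the (r, g, b) tuple at (row, col) from row-major bytes."""
    start = (row * width + col) * 3  # 3 bytes per pixel
    return tuple(data[start:start + 3])


# A 2x2 image: red and green on the top row, blue and white on the bottom row.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
data = flatten(image)
print(len(data))                # 12
print(pixel_at(data, 2, 1, 0))  # (0, 0, 255)
```

Because the order is fixed, the image width is all a reader needs to map a position in the byte stream back to a row and column.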

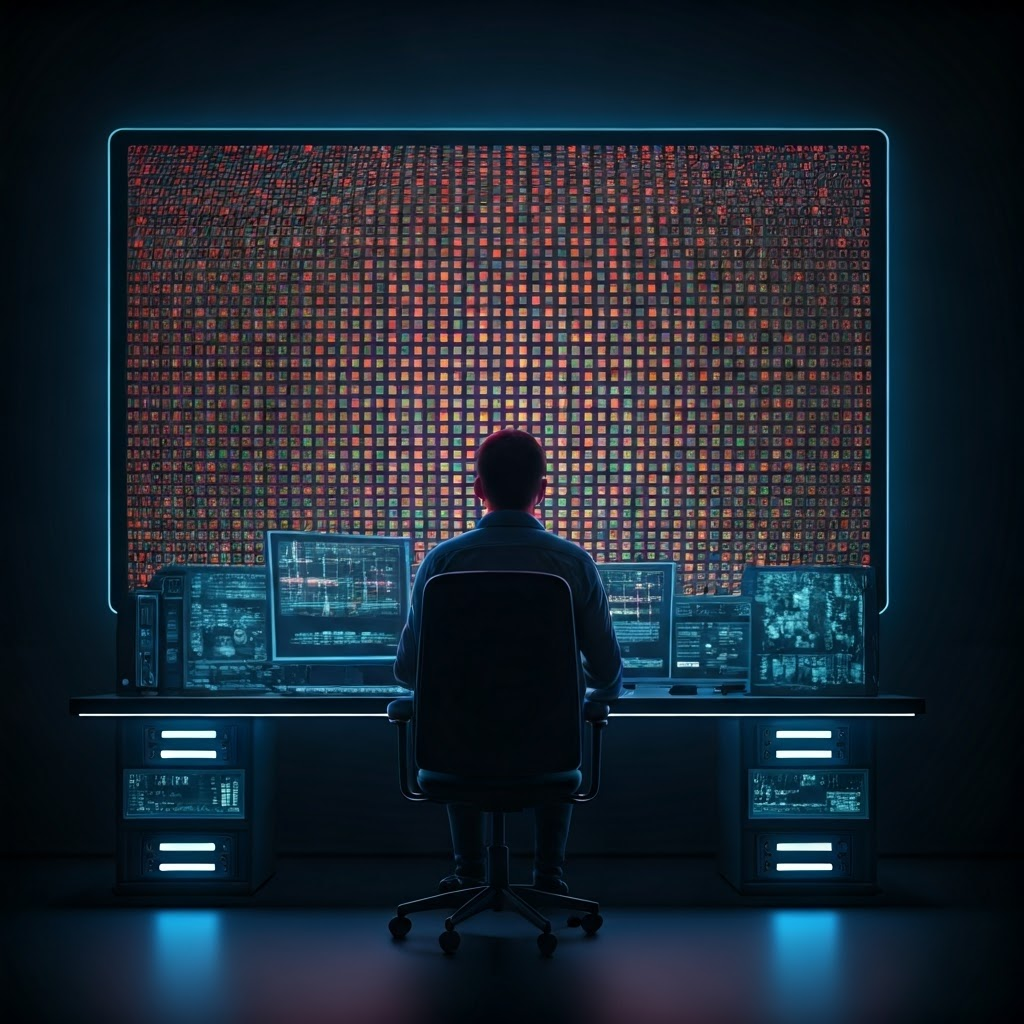

Zoom in far enough and you notice blocky squares: the raw pixels. Computers never see the finished picture, only rows of numbers.

This numeric view is the starting point for computer-vision systems that turn pixels into meaning.

From Images to Ideas: The Main Computer Vision Tasks

Suppose you want to group vacation shots. Image classification answers, “What is in this picture?” It labels each photo as beach, city, or friends by learning pixel patterns.

An app that adds hats needs exact positions. Object detection finds where items are and draws a rectangle around each one, for example one box per face.

To blur a background, your phone must know which pixels belong to you. Segmentation assigns every pixel to a class, such as person, pet, or backdrop, giving the finest level of detail.
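To make the per-pixel idea concrete, here is a small sketch of how a segmentation result might be used once it exists. It assumes the mask is already available (producing it is the hard part and the job of the segmentation model) and it dims the background instead of blurring it, purely to keep the code short. The function name `dim_background` and the `factor` parameter are hypothetical.

```python
def dim_background(image, mask, factor=0.5):
    """Darken every pixel whose mask value is 0 (background); keep 1 (subject)."""
    result = []
    for pixel_row, mask_row in zip(image, mask):
        new_row = []
        for (r, g, b), is_subject in zip(pixel_row, mask_row):
            if is_subject:
                new_row.append((r, g, b))
            else:
                new_row.append((int(r * factor), int(g * factor), int(b * factor)))
        result.append(new_row)
    return result


# Left column is background, right column is the subject.
image = [
    [(200, 200, 200), (120, 200, 80)],
    [(200, 200, 200), (120, 200, 80)],
]
mask = [
    [0, 1],
    [0, 1],
]
out = dim_background(image, mask)
print(out[0])  # [(100, 100, 100), (120, 200, 80)]
```

The mask has the same shape as the image, one label per pixel, which is what separates segmentation from a single label or a rectangle.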

Classification, detection, and segmentation each reveal progressively more: what is in the image, where it is, and exactly which pixels it covers. They let software unlock phones, sort albums, and guide self-driving cars.

The Magic of Convolutional Neural Networks

How do computers learn patterns in millions of numbers? Convolutional neural networks (CNNs) tackle this by scanning small windows across an image.

The first CNN layer detects simple edges or blobs. Each filter slides over every position, checking for basic shapes.
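The sliding step can be sketched in a few lines of plain Python. This example assumes a grayscale image (one number per pixel) and a hand-picked 2x2 filter that responds to vertical edges. In a real CNN the filter values are learned rather than chosen by hand, and deep-learning libraries compute the same sum far more efficiently. The name `slide_filter` is hypothetical.

```python
def slide_filter(image, kernel):
    """Slide `kernel` over every position where it fits and return the responses."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for top in range(out_h):
        row = []
        for left in range(out_w):
            # Multiply each window value by the matching filter value and sum.
            total = 0
            for i in range(k_h):
                for j in range(k_w):
                    total += image[top + i][left + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Grayscale image: dark left half (0), bright right half (255).
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
# Hand-picked filter: responds when the right column is brighter than the left.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
response = slide_filter(image, vertical_edge)
print(response)  # [[0, 510, 0], [0, 510, 0]]
```

The response is zero over the flat regions and large only where dark meets bright, which is the sense in which a filter "detects" an edge.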

Higher layers combine earlier findings into bigger shapes—circles, corners, or textures. Stack enough layers and the network identifies whole objects, even dog breeds.

CNNs adjust their filters through training. Show thousands of labeled photos and the model tweaks itself until it spots the right features.
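As a toy illustration of "tweaking itself", the sketch below learns a two-weight filter from labeled examples using gradient descent on squared error. Everything here is an assumption made for illustration: the four hand-made examples, the learning rate, the step count, and the name `train_filter`. Real CNNs train many filters across many layers with backpropagation, usually through a deep-learning framework.

```python
def train_filter(examples, steps=500, learning_rate=0.1):
    """Fit two filter weights by gradient descent on mean squared error."""
    weights = [0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0]
        for (left, right), target in examples:
            prediction = weights[0] * left + weights[1] * right
            error = prediction - target
            # Derivative of the squared error with respect to each weight.
            grad[0] += 2 * error * left / len(examples)
            grad[1] += 2 * error * right / len(examples)
        # Nudge each weight a small step against its gradient.
        weights[0] -= learning_rate * grad[0]
        weights[1] -= learning_rate * grad[1]
    return weights


# Each example: (left brightness, right brightness) and the desired response.
# The label is high when the right pixel is brighter than the left.
examples = [
    ((0.0, 1.0), 1.0),
    ((1.0, 0.0), -1.0),
    ((1.0, 1.0), 0.0),
    ((0.0, 0.0), 0.0),
]
weights = train_filter(examples)
print([round(w, 2) for w in weights])  # [-1.0, 1.0]
```

Nobody told the code that the answer was "right minus left". The weights started at zero and drifted there because that choice fits the labeled examples.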

Before CNNs, engineers often hand-wrote fragile rules for each kind of object. Now data teaches the system, which makes vision tasks easier to scale and more robust to variation.

Why All This Matters

Knowing that images are just numbers changes how you view privacy. When an app recognizes your face, it matches patterns in pixel grids—not the real you.

So if your phone greets you or confuses your dog with the neighbor’s, remember: it is only crunching numbers and learning from examples.