How Machines Learn to Talk: The Basics of LLMs

What Is a Large Language Model?

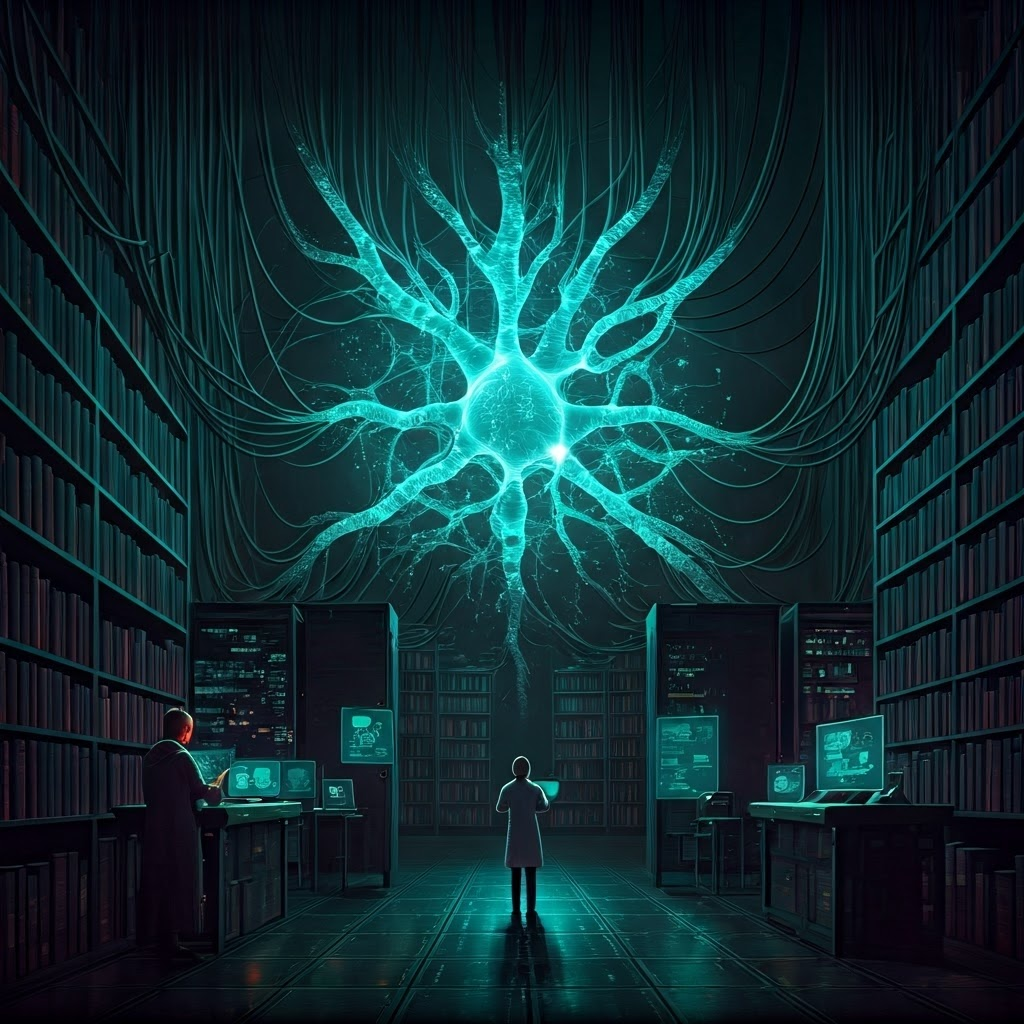

Large language models learn language by reading massive amounts of text. As they train, they adjust billions of internal numbers called parameters. Imagine a virtual brain built from math that predicts the next word, answers questions, or finishes stories. All three come from the same skill: repeatedly predicting what comes next. Generally, the more data it sees, the more fluent and human it sounds.
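To make "predicts the next word" concrete, here is a minimal sketch, assuming the model has already produced a raw score (a "logit") for each candidate word. The candidate words and their scores are hypothetical. The softmax function turns the scores into probabilities, and the most probable word is picked (so-called greedy decoding). Real models score tens of thousands of tokens at every step and often sample instead of always taking the top choice.

```python
import math


def softmax(scores):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - m) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def predict_next(scores):
    """Greedy decoding: return the single most probable next word."""
    probs = softmax(scores)
    return max(probs, key=probs.get), probs


# Hypothetical scores a model might assign after "The cat sat on the"
scores = {"mat": 4.0, "sofa": 2.5, "volcano": -1.0}
word, probs = predict_next(scores)
print(word)  # mat
for w, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{w}: {p:.3f}")
```

Appending the chosen word to the text and repeating the step is, in outline, how a model writes a whole sentence.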

“Large” refers to scale, meaning the number of parameters and the amount of training data and computing power, not physical size. Smaller models handle simple tasks like spelling correction. An LLM can write poems, translate languages, or explain physics. That power demands vast data and energy, yet the cost unlocks the richness and quirks of human language.

Tokens, Embeddings, and the Language Puzzle

Computers split text into small pieces called tokens. “I’m learning fast!” might become [I] [’m] [learning] [fast] [!]. Tokens can be words, sub-words, or punctuation. This flexible split lets the model digest any text, even typos or invented words, because an unfamiliar word can always be broken into smaller known pieces.
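The sketch below illustrates both ideas under simplifying assumptions. The regular expression is a hypothetical hand-written rule set that reproduces the example split. The sub-word vocabulary `SUBWORDS` and the helper `split_subwords` are also hypothetical: they use a greedy longest-match split that falls back to single characters. Real LLM tokenizers, such as those based on byte-pair encoding, learn their sub-word pieces from data rather than using fixed rules like these.

```python
import re

# Hypothetical rules: a contraction like ’m, a run of letters/digits,
# or a single punctuation mark. Accepts both curly and straight apostrophes.
TOKEN_PATTERN = re.compile(r"[’']\w+|\w+|[^\w\s]")


def tokenize(text):
    """Split text into word, contraction, and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


# Hypothetical sub-word vocabulary for illustration only.
SUBWORDS = {"learn", "ing", "un", "believ", "able"}


def split_subwords(word, vocab=SUBWORDS):
    """Greedy longest-match split of one word into known pieces."""
    pieces, i = [], 0
    while i < len(word):
        # Try the longest vocabulary piece that starts at position i.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            # Nothing matched: fall back to one character, so typos
            # and invented words still get tokens.
            pieces.append(word[i])
            i += 1
    return pieces


print(tokenize("I’m learning fast!"))   # ['I', '’m', 'learning', 'fast', '!']
print(split_subwords("learning"))       # ['learn', 'ing']
print(split_subwords("unbelievable"))   # ['un', 'believ', 'able']
print(split_subwords("learnz"))         # ['learn', 'z']
```

The character fallback is what makes the split "flexible": no input is ever left without tokens.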

Each token is turned into a list of numbers, a vector called an embedding. Think of embeddings as coordinates on a language map. Similar words sit close together: “cat” and “kitten” cluster, while “cat” and “volcano” stay far apart. Inside the model, context then shifts these positions, so “hot dog” signals food, not weather.
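One common way to measure "close together" on this map is cosine similarity, which compares the directions of two vectors. The sketch below assumes tiny, hand-picked 3-D embeddings in a hypothetical `EMBEDDINGS` table. Real models learn vectors with hundreds or thousands of dimensions during training.

```python
import math

# Hypothetical hand-picked embeddings, for illustration only.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "volcano": [0.1, 0.05, 0.95],
}


def cosine_similarity(a, b):
    """1.0 means same direction; values near 0 mean unrelated directions."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


cat = EMBEDDINGS["cat"]
print(f"cat vs kitten:  {cosine_similarity(cat, EMBEDDINGS['kitten']):.2f}")
print(f"cat vs volcano: {cosine_similarity(cat, EMBEDDINGS['volcano']):.2f}")
```

With these made-up numbers, "cat" scores far higher against "kitten" than against "volcano", mirroring the clustering described above.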

The Transformer: The Engine Under the Hood

The transformer design lets the model view a whole sentence at once. Older models, such as recurrent neural networks, read one word at a time and struggled to connect words that sat far apart. Transformers changed that by letting each word decide which other words deserve attention.

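This focus is called attention. In “She saw the man with the telescope,” it is unclear who holds the telescope. Attention lets the model weigh every word against every other word to pick the most likely reading. Transformers run several attention “heads” side by side, so picture a team of readers, each tracking a different kind of link in the sentence.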
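The core computation behind attention in the original transformer is scaled dot-product attention, softmax(QKᵀ / √d_k) · V. The pure-Python sketch below implements that formula for small lists of vectors. As a simplifying assumption, the usage example reuses the same made-up 2-D vectors as queries, keys, and values. A real model first multiplies each token's embedding by separate learned matrices, and each attention head has its own set of them.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of vectors, one per token. Returns the new
    vector for every query token and the attention weights it used.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this token against every token in the sentence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # how much attention each token receives
        # Blend the value vectors according to those weights.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy self-attention with hypothetical vectors for three tokens.
tokens = ["man", "with", "telescope"]
vectors = [[1.0, 0.0], [0.5, 0.5], [0.9, 0.2]]
outputs, weights = attention(vectors, vectors, vectors)
for tok, row in zip(tokens, weights):
    print(tok, [round(w, 2) for w in row])
```

Each row of weights sums to 1, so every output vector is a weighted blend of the whole sentence, computed for all tokens without reading them in order.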

Transformers stack many layers, and each layer passes its output up to the next one. Lower layers tend to pick up simple word pairs like “green apple.” Higher layers tend to capture broader patterns such as grammar or style, for example riddle-like phrasing. Layer by layer, these refinements sharpen the model’s final output.
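Here is a minimal sketch of that upward flow, assuming a heavily simplified layer: self-attention without learned weights, plus a residual connection that adds the layer's input back to its output. The helpers `transformer_layer` and `run_stack` are hypothetical names. Real transformer layers also include learned projections, a feed-forward network, and layer normalization, all omitted here.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs):
    """Simplified self-attention: queries, keys, and values are all xs."""
    d = len(xs[0])
    out = []
    for q in xs:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs])
        out.append([sum(w * x[j] for w, x in zip(weights, xs)) for j in range(d)])
    return out


def transformer_layer(xs):
    """One simplified layer: attention output added back to its input (residual)."""
    attended = self_attention(xs)
    return [[x + a for x, a in zip(row, att)] for row, att in zip(xs, attended)]


def run_stack(xs, num_layers):
    """Feed each layer's output into the next layer, bottom to top."""
    history = [xs]
    for _ in range(num_layers):
        xs = transformer_layer(xs)
        history.append(xs)
    return history


# Hypothetical 2-D vectors for a three-token sentence.
sentence = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
history = run_stack(sentence, num_layers=3)
for depth, layer_output in enumerate(history):
    print(depth, [[round(v, 2) for v in vec] for vec in layer_output])
```

Information only moves upward: layer n sees nothing but the output of layer n − 1, which already mixes in context from every token below it.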

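Transformers handle language at scale. Because they process all the words in a sentence in parallel rather than one by one, they train efficiently on modern hardware, adapt well to new tasks, and learn from vast data. Since the 2017 paper “Attention Is All You Need,” transformers have become the standard engine for LLMs.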

Why Attention Feels Like Human Understanding

Attention makes transformers feel more human. Your mind jumps through a sentence, linking ideas near and far. Transformers imitate that jumping, which helps them catch context, double meanings, and wordplay. Tokens turn into numbers, embeddings map meaning, and layers of attention weave it all together, like tireless, organized note-takers who never sleep.