Machines That See: How AI Spots What Humans Miss

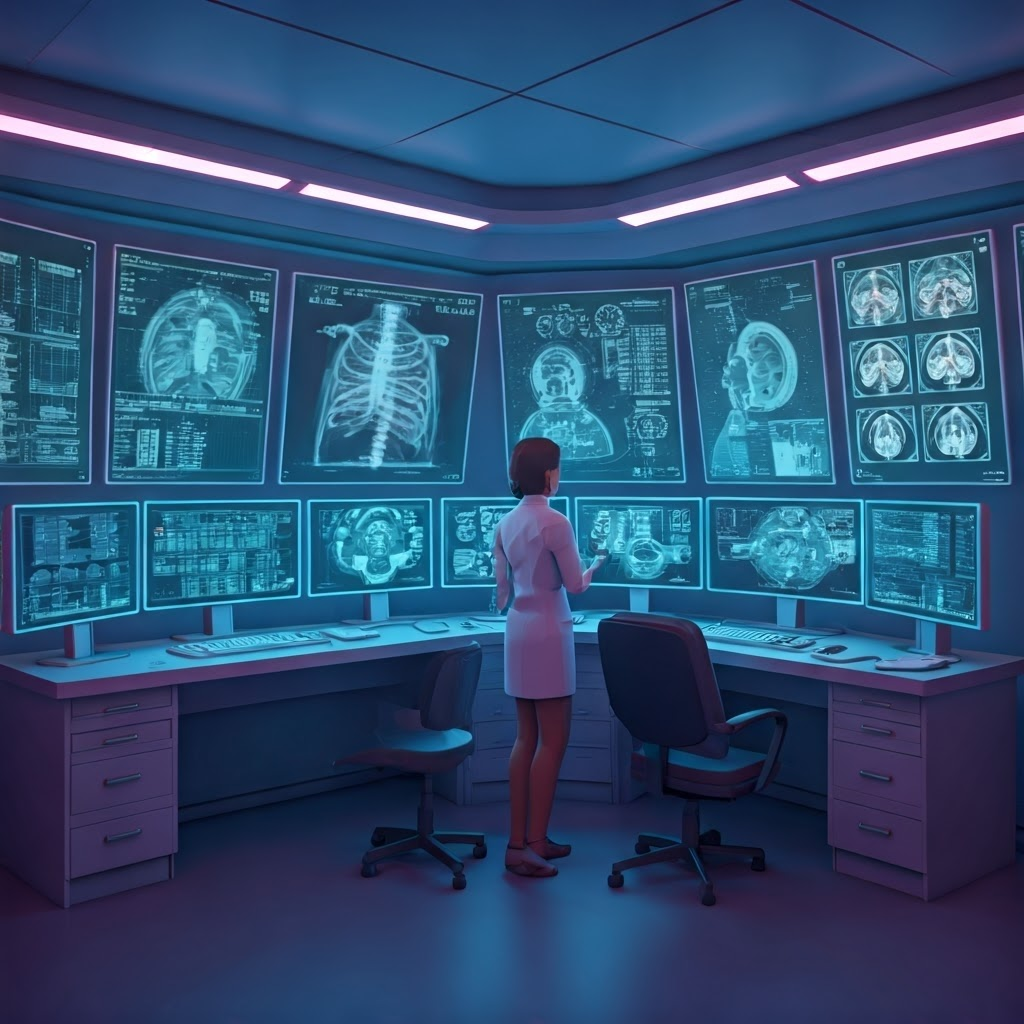

The New Eyes in Medicine

Imagine walking into a high-tech X-ray room. A digital assistant reviews your scan in seconds and nudges the doctor to zoom in on a tiny spot the human eye might skip. This partnership is changing both how quickly and how accurately problems get spotted.

AI now works like a tireless teammate with a long memory. It can review thousands of X-rays, MRIs, and pathology slides, flagging subtle details humans may overlook. By training on vast labeled datasets, the system learns to catch early hints of trouble, and on some rare or faint conditions it can outpace even top specialists.

The real power lies in pattern recognition. For diabetic retinopathy, a model from Google screens eye photos in seconds, pushing only tricky cases to doctors. The goal isn’t to replace experts but to handle the heavy lifting, sorting clear-cut images from the uncertain so clinicians focus where they’re needed most.
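To make that sorting step concrete, here is a minimal sketch of confidence-based triage. It is not Google's system: the `Screening` record, the `triage` function, and both cutoff values are hypothetical names and numbers chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Screening:
    image_id: str
    disease_probability: float  # model's estimated chance of disease, from 0 to 1


# Hypothetical cutoffs; a real clinic would set these from validation studies.
CLEAR_NEGATIVE_BELOW = 0.05
CLEAR_POSITIVE_ABOVE = 0.95


def triage(result: Screening) -> str:
    """Sort one screening into a clear-cut bucket or the uncertain pile."""
    if result.disease_probability < CLEAR_NEGATIVE_BELOW:
        return "clear_negative"
    if result.disease_probability > CLEAR_POSITIVE_ABOVE:
        return "clear_positive"
    # Everything in between is a tricky case for a clinician to look at.
    return "needs_review"


batch = [
    Screening("eye-001", 0.01),
    Screening("eye-002", 0.48),
    Screening("eye-003", 0.99),
]
for item in batch:
    print(item.image_id, triage(item))
```

Moving the two cutoffs closer together sends fewer images to doctors but raises the risk of a confident mistake, which is why choosing them is a clinical decision rather than a purely technical one.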

From Pixels to Answers

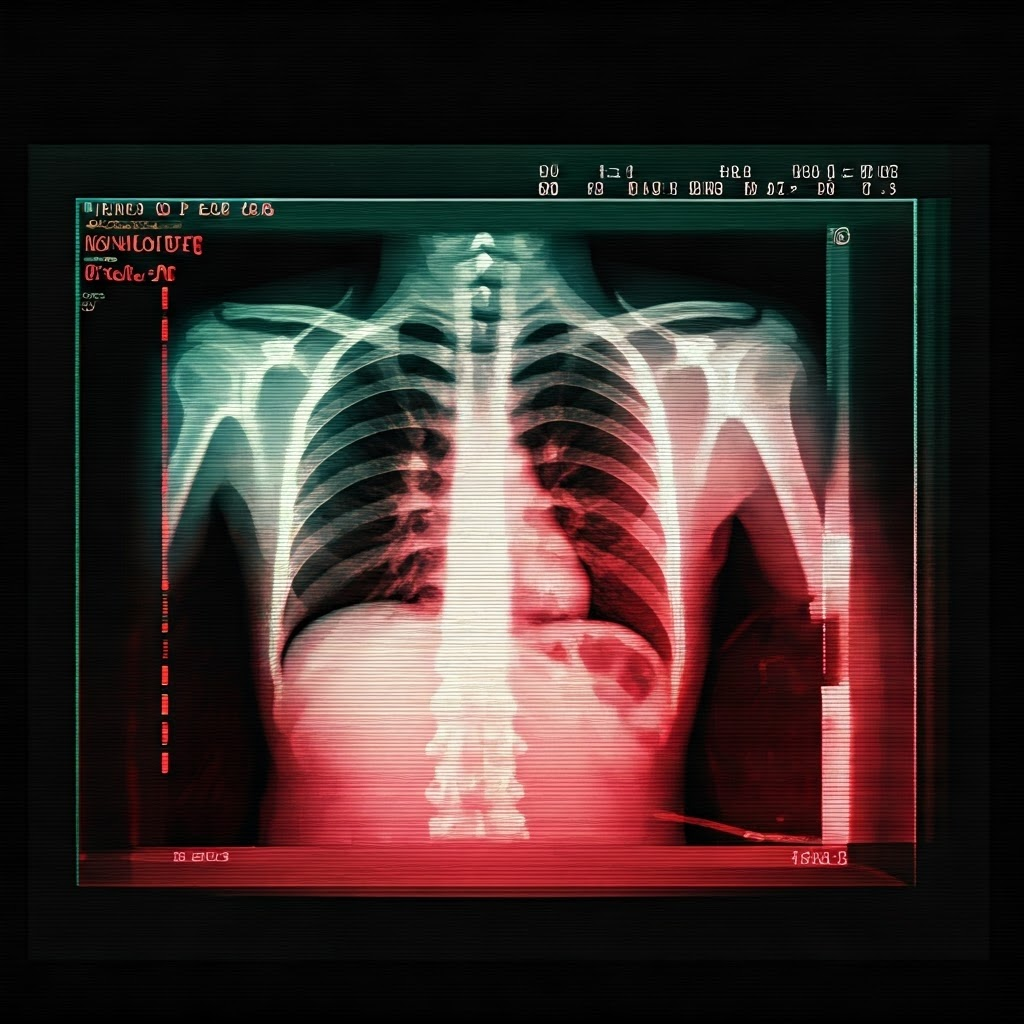

Turning a medical image into insight works like a relay race. A technician captures a CT scan made of millions of pixels. The AI receives these pixels and, much like scanning a crowd for a familiar face, looks for mathematical similarities to patterns it learned from earlier images. Each stage hands off to the next.

As it analyzes, the system assigns probabilities to findings. A bright spot might be scored as 93 percent likely harmless and 7 percent likely an infection. The system flags the image, and the doctor decides if further tests are needed. This process often finishes in seconds, an advantage in busy hospitals short on specialists.
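One common way to turn a model's raw scores into percentages like these is the softmax function. The sketch below assumes a two-label model; the labels, the raw scores, and the 5 percent review rule are all made up for illustration, with the scores picked so the split comes out near 93 and 7 percent.

```python
import math


def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for one bright spot on a scan.
labels = ["harmless", "infection"]
raw_scores = [2.6, 0.0]
probabilities = dict(zip(labels, softmax(raw_scores)))

# Hypothetical rule: flag the image if infection is more than 5 percent likely.
REVIEW_THRESHOLD = 0.05
needs_review = probabilities["infection"] > REVIEW_THRESHOLD

for label, p in probabilities.items():
    print(f"{label}: {p:.0%}")
print("flag for doctor:", needs_review)
```

Even a finding that is probably harmless gets flagged here, because a small chance of infection can still be worth a second look.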

Stanford researchers built an AI that screens chest X-rays for over a dozen conditions, including pneumonia, collapsed lung, and heart enlargement, before the radiologist even sits down. Patients receive faster answers, and critical findings are less likely to slip through the cracks.

Sometimes, AI uncovers unexpected links. Models trained on cancer tissue slides can hint at how aggressive a tumor might be or which treatment could work. The computer doesn’t “understand” cancer, but after seeing more samples than a pathologist could review in a lifetime, it detects patterns no one noticed before.

When AI Gets It Wrong

Even cutting-edge systems stumble. Some early models mislabeled images because they had learned to recognize which hospital a scan came from, not the disease itself, simply because certain hospitals saw sicker patients. Blurry scans, odd lighting, or rare diseases can trip them up too. These errors remind us why oversight matters.

Doctors remain the final check. If AI flags a tumor, the physician reviews the image, weighs patient history, and orders more tests if needed. Each corrected mistake can become new training data, steadily improving the model, much like teaching a child not to repeat the same slip-up.

Invisible Help, Lasting Impact

Good imaging AI stays in the background yet catches disease early, identifies strokes within minutes, and flags rare infections before they spread. It’s a tool, powerful but dependent on the data it learns from and the humans who guide it.

Some cases still demand a human’s experience or empathy—a careful conversation about a puzzling spot on a scan. Machines remember and sort patterns; people understand, decide, and care. Working together, they make healthcare faster, safer, and more personal.